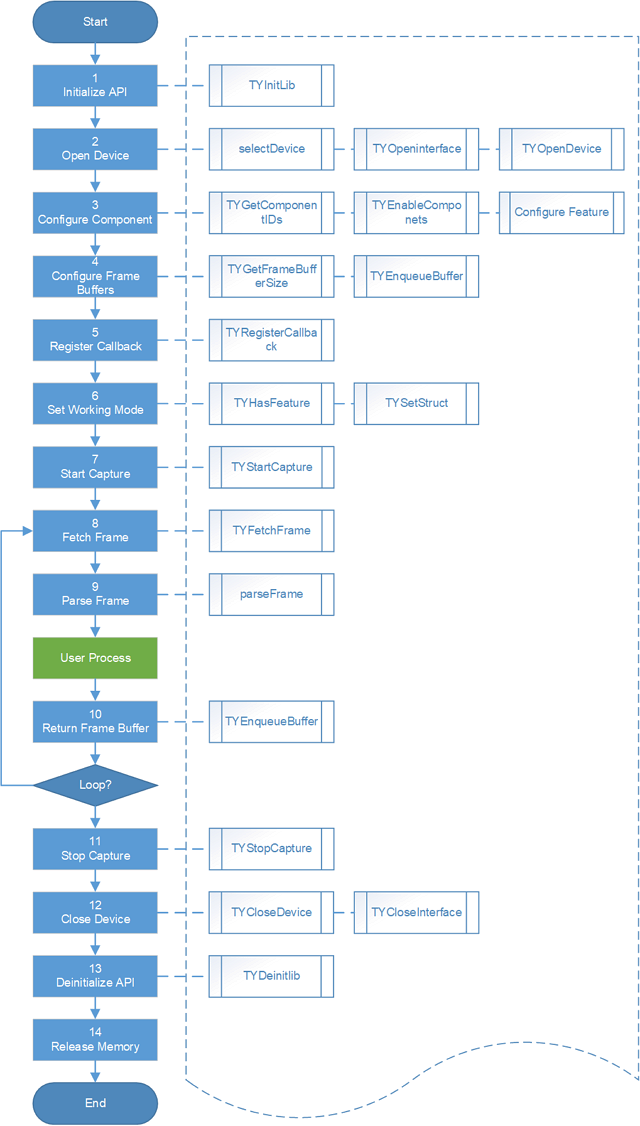

Image Acquisition Process

The figure illustrates the configuration and image acquisition process for the depth camera. The sections that follow use the C++ SDK sample program SimpleView_FetchFrame to walk through each step of that process.


Initialize APIs

TYInitLib initializes the SDK's internal data structures, such as device objects.

// Load the library

LOGD("Init lib");

ASSERT_OK( TYInitLib() );

// Retrieve SDK version information

TY_VERSION_INFO ver;

ASSERT_OK( TYLibVersion(&ver) );

LOGD(" - lib version: %d.%d.%d", ver.major, ver.minor, ver.patch);
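The sample wraps every SDK call in ASSERT_OK so that a failing status is caught immediately. The sketch below illustrates that pattern only; it is not the SDK's macro. CHECK_OK, Status, fakeSdkCall, and runChecked are hypothetical names introduced for this example, and the sketch throws on failure, which may differ from how the sample's ASSERT_OK reacts.

```cpp
#include <cassert>
#include <cstdio>
#include <stdexcept>
#include <string>

// Hypothetical status codes; the real SDK defines its own status values.
enum Status { STATUS_OK = 0, STATUS_ERROR = -1 };

// Evaluate the expression once and throw if it did not return STATUS_OK.
// The message carries the expression text, the status, and the source location.
#define CHECK_OK(expr)                                                        \
    do {                                                                      \
        int status_ = (expr);                                                 \
        if (status_ != STATUS_OK) {                                           \
            throw std::runtime_error(std::string(#expr) +                     \
                " failed with status " + std::to_string(status_) +            \
                " at " + __FILE__ + ":" + std::to_string(__LINE__));          \
        }                                                                     \
    } while (0)

// Stand-in for an SDK call (assumption: not part of the SDK).
int fakeSdkCall(bool succeed) { return succeed ? STATUS_OK : STATUS_ERROR; }

// Returns true if the wrapped call passed the check, false if CHECK_OK threw.
bool runChecked(bool succeed) {
    try {
        CHECK_OK(fakeSdkCall(succeed));
        return true;
    } catch (const std::runtime_error& e) {
        std::fprintf(stderr, "%s\n", e.what());
        return false;
    }
}
```

The do { ... } while (0) wrapper makes the macro behave like a single statement, which is why each use must end with a semicolon.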

Open Device

Acquire Device List

When retrieving device information for the first time, use selectDevice() to query the number of connected devices and obtain a list of all connected devices.

std::vector<TY_DEVICE_BASE_INFO> selected;

ASSERT_OK( selectDevice(TY_INTERFACE_ALL, ID, IP, 1, selected) );

ASSERT(selected.size() > 0);

TY_DEVICE_BASE_INFO& selectedDev = selected[0];

Open Interface

ASSERT_OK( TYOpenInterface(selectedDev.iface.id, &hIface) );

Open Device

ASSERT_OK( TYOpenDevice(hIface, selectedDev.id, &hDevice) );

Configure Components

Query Component Status

// Query components that are supported by the camera

TY_COMPONENT_ID allComps;

ASSERT_OK( TYGetComponentIDs(hDevice, &allComps) );
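The sample treats the value returned by TYGetComponentIDs as a bitmask and tests it with the & operator. A minimal sketch of that test follows. The COMP_* constants are placeholder bit values made up for this example, not the SDK's TY_COMPONENT_* values, and hasComponents is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Placeholder bit flags for illustration only; the SDK defines the real values.
constexpr uint32_t COMP_DEPTH    = 1u << 0;
constexpr uint32_t COMP_IR_LEFT  = 1u << 1;
constexpr uint32_t COMP_IR_RIGHT = 1u << 2;
constexpr uint32_t COMP_RGB      = 1u << 3;

// True when every bit in `wanted` is also set in `supported`.
constexpr bool hasComponents(uint32_t supported, uint32_t wanted) {
    return (supported & wanted) == wanted;
}
```

Comparing against `wanted` rather than testing for a non-zero result lets the same helper check several components in one call.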

Configure Components and Set Features

After the device is opened, only the virtual component TY_COMPONENT_DEVICE is enabled by default.

// Enable the RGB component and configure RGB component features

if (allComps & TY_COMPONENT_RGB_CAM && color) {

LOGD("Has RGB camera, open RGB cam");

ASSERT_OK( TYEnableComponents(hDevice, TY_COMPONENT_RGB_CAM) );

// Create an ISP handle to convert the raw image (color Bayer format) to an RGB image

ASSERT_OK(TYISPCreate(&hColorIspHandle));

// Init code can be modified in common.hpp

// NOTE: Set the RGB image format and size before initializing the ISP

ASSERT_OK(ColorIspInitSetting(hColorIspHandle, hDevice));

// You can call the following function to show the features supported by the color ISP

}

// Enable the left IR component

if (allComps & TY_COMPONENT_IR_CAM_LEFT && ir) {

LOGD("Has IR left camera, open IR left cam");

ASSERT_OK(TYEnableComponents(hDevice, TY_COMPONENT_IR_CAM_LEFT));

}

// Enable the right IR component

if (allComps & TY_COMPONENT_IR_CAM_RIGHT && ir) {

LOGD("Has IR right camera, open IR right cam");

ASSERT_OK(TYEnableComponents(hDevice, TY_COMPONENT_IR_CAM_RIGHT));

}

// Enable the depth component and configure depth component features

LOGD("Configure components, open depth cam");

DepthViewer depthViewer("Depth");

if (allComps & TY_COMPONENT_DEPTH_CAM && depth) {

// Configure depth component features (depth map resolution)

TY_IMAGE_MODE image_mode;

ASSERT_OK(get_default_image_mode(hDevice, TY_COMPONENT_DEPTH_CAM, image_mode));

LOGD("Select depth map Mode: %dx%d", TYImageWidth(image_mode), TYImageHeight(image_mode));

ASSERT_OK(TYSetEnum(hDevice, TY_COMPONENT_DEPTH_CAM, TY_ENUM_IMAGE_MODE, image_mode));

// Enable the depth component

ASSERT_OK(TYEnableComponents(hDevice, TY_COMPONENT_DEPTH_CAM));

// Configure depth component features (scale unit)

// Depth map pixels are uint16_t; the default unit is 1 mm

// Actual depth (mm) = PixelValue * ScaleUnit

float scale_unit = 1.;

TYGetFloat(hDevice, TY_COMPONENT_DEPTH_CAM, TY_FLOAT_SCALE_UNIT, &scale_unit);

depthViewer.depth_scale_unit = scale_unit;

}
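The comments in the depth configuration state that the actual depth in mm equals the pixel value multiplied by the scale unit. The sketch below applies that formula to a buffer of raw 16-bit depth pixels. depthToMillimetres is a hypothetical helper written for this example and is not part of the SDK.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Convert raw 16-bit depth pixels to millimetres: depth_mm = pixel * scaleUnit.
std::vector<float> depthToMillimetres(const std::vector<uint16_t>& raw, float scaleUnit) {
    std::vector<float> mm;
    mm.reserve(raw.size());
    for (uint16_t px : raw) {
        mm.push_back(static_cast<float>(px) * scaleUnit);
    }
    return mm;
}
```

With a scale unit of 0.25, for example, a raw pixel value of 1000 corresponds to 250 mm.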

Manage Frame Buffers

Note

Before allocating frame buffers, enable the required components with TYEnableComponents() and set the image format and resolution with TYSetEnum(). The frame buffer size depends on these settings, so buffers sized before the configuration is final may be too small.

// Query frame buffer size for current camera component configurations

LOGD("Prepare image buffer");

uint32_t frameSize;

ASSERT_OK( TYGetFrameBufferSize(hDevice, &frameSize) );

LOGD(" - Get size of framebuffer, %d", frameSize);

// Allocate frame buffers

LOGD(" - Allocate & enqueue buffers");

char* frameBuffer[2];

frameBuffer[0] = new char[frameSize];

frameBuffer[1] = new char[frameSize];

// Enqueue frame buffers

LOGD(" - Enqueue buffer (%p, %d)", frameBuffer[0], frameSize);

ASSERT_OK( TYEnqueueBuffer(hDevice, frameBuffer[0], frameSize) );

LOGD(" - Enqueue buffer (%p, %d)", frameBuffer[1], frameSize);

ASSERT_OK( TYEnqueueBuffer(hDevice, frameBuffer[1], frameSize) );
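The sample allocates its two buffers with new[] and frees them by hand at the end. As an alternative sketch, the buffers can be owned by std::vector so that the memory is released automatically. FrameBufferPool is a hypothetical class introduced for this example; it does not call the SDK itself.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Owns `count` frame buffers of `frameSize` bytes each. The vectors free
// their memory when the pool is destroyed, so no manual cleanup is needed.
class FrameBufferPool {
public:
    FrameBufferPool(std::size_t count, uint32_t frameSize)
        : buffers_(count, std::vector<char>(frameSize)) {}

    std::size_t count() const { return buffers_.size(); }

    // Pointer to the start of buffer i.
    char* data(std::size_t i) { return buffers_[i].data(); }

    // Size of buffer i in bytes.
    uint32_t size(std::size_t i) const {
        return static_cast<uint32_t>(buffers_[i].size());
    }

private:
    std::vector<std::vector<char>> buffers_;
};
```

Each data(i) and size(i) pair would then be passed to TYEnqueueBuffer in place of frameBuffer[i] and frameSize. The pool has to stay alive for as long as the device may write into the buffers.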

Register Callback Function

TYRegisterEventCallback

Register an event callback function. When an exception occurs, the system calls the function registered via TYRegisterEventCallback. The following example demonstrates a callback function that includes exception handling for reconnection scenarios.

static bool offline = false;

void eventCallback(TY_EVENT_INFO *event_info, void *userdata)

{

if (event_info->eventId == TY_EVENT_DEVICE_OFFLINE) {

LOGD("=== Event Callback: Device Offline!");

// Note:

// Please set TY_BOOL_KEEP_ALIVE_ONOFF feature to false if you need to debug with breakpoint!

offline = true;

}

}

int main(int argc, char* argv[])

{

LOGD("Register event callback");

ASSERT_OK(TYRegisterEventCallback(hDevice, eventCallback, NULL));

while(!exit_main && !offline) {

//Fetch and process frame data

}

if (offline) {

//Release resources

TYStopCapture(hDevice);

TYCloseDevice(hDevice);

// Optionally reopen the device and restart capture,

// or close the interface and exit

}

return 0;

}
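The example above shares a plain static bool between the callback and the main loop. If the SDK invokes the callback on a different thread from the one running the loop (an assumption; the text above does not say), std::atomic<bool> is a safer way to share the flag. The sketch below shows the same callback shape with stand-in types. EventId, EventInfo, g_offline, and onEvent are hypothetical names, not SDK definitions.

```cpp
#include <atomic>
#include <cassert>

// Stand-ins for the SDK's event types; names and values are illustrative only.
enum EventId { EVENT_OTHER = 0, EVENT_DEVICE_OFFLINE = 1 };
struct EventInfo { EventId eventId; };

// Flag shared between the callback and the capture loop.
std::atomic<bool> g_offline{false};

// Callback with the same shape as the sample's eventCallback:
// it only records the offline event and returns quickly.
void onEvent(EventInfo* info, void* /*userdata*/) {
    if (info->eventId == EVENT_DEVICE_OFFLINE) {
        g_offline.store(true);
    }
}
```

The capture loop would then test g_offline.load() in its loop condition, in the same place where the sample tests offline.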

Set Work Mode

Configure the depth camera's work mode as your application requires. The example below turns trigger mode off; for other work modes, refer to Work Mode Settings.

// Check if the camera supports setting work mode

bool hasTrigger;

ASSERT_OK(TYHasFeature(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_TRIGGER_PARAM_EX, &hasTrigger));

if (hasTrigger) {

// Set the depth camera to work mode 0 (TY_TRIGGER_MODE_OFF)

LOGD("Disable trigger mode");

TY_TRIGGER_PARAM_EX trigger;

trigger.mode = TY_TRIGGER_MODE_OFF;

ASSERT_OK(TYSetStruct(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_TRIGGER_PARAM_EX, &trigger, sizeof(trigger)));

}

Start Image Capture

LOGD("Start capture");

ASSERT_OK( TYStartCapture(hDevice) );

Fetch Frame Data

LOGD("While loop to fetch frame");

bool exit_main = false;

TY_FRAME_DATA frame;

int index = 0;

while(!exit_main) {

int err = TYFetchFrame(hDevice, &frame, -1);

if( err == TY_STATUS_OK ) {

LOGD("Get frame %d", ++index);

int fps = get_fps();

if (fps > 0){

LOGI("fps: %d", fps);

}

cv::Mat depth, irl, irr, color;

parseFrame(frame, &depth, &irl, &irr, &color, hColorIspHandle);

if(!depth.empty()){

depthViewer.show(depth);

}

if(!irl.empty()){ cv::imshow("LeftIR", irl); }

if(!irr.empty()){ cv::imshow("RightIR", irr); }

if(!color.empty()){ cv::imshow("Color", color); }

int key = cv::waitKey(1);

switch(key & 0xff) {

case 0xff:

break;

case 'q':

exit_main = true;

break;

default:

LOGD("Unmapped key %d", key);

}

TYISPUpdateDevice(hColorIspHandle);

LOGD("Re-enqueue buffer(%p, %d)", frame.userBuffer, frame.bufferSize);

ASSERT_OK( TYEnqueueBuffer(hDevice, frame.userBuffer, frame.bufferSize) );

}

}
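The key handling in the loop relies on cv::waitKey returning -1 when no key is pressed within the delay. Masking -1 with 0xff gives 0xff, which is why the 0xff case does nothing. The sketch below reproduces that dispatch without OpenCV. KeyAction and classifyKey are hypothetical names used for illustration.

```cpp
#include <cassert>

enum class KeyAction { None, Quit, Unmapped };

// Mirrors the sample's switch: mask the key code to its low byte, then dispatch.
KeyAction classifyKey(int key) {
    switch (key & 0xff) {
        case 0xff: return KeyAction::None;      // -1 (no key pressed) masks to 0xff
        case 'q':  return KeyAction::Quit;      // 'q' ends the capture loop
        default:   return KeyAction::Unmapped;  // any other key is only logged
    }
}
```

Masking to the low byte also discards any higher bits that some platforms may add to the key code.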

Stop Capture

ASSERT_OK( TYStopCapture(hDevice) );

Close Device

// Close Device

ASSERT_OK( TYCloseDevice(hDevice));

// Release interface handle

ASSERT_OK( TYCloseInterface(hIface) );

// Release the color ISP handle

ASSERT_OK(TYISPRelease(&hColorIspHandle));

Release API

// Unload Library

ASSERT_OK( TYDeinitLib() );

// Free Allocated Memory Resources

delete[] frameBuffer[0];

delete[] frameBuffer[1];
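The teardown steps run in roughly the reverse order of setup: stop capture, close the device, close the interface, then unload the library. One way to keep that order correct on every exit path is a scope guard, sketched below. ScopeGuard and runTeardownDemo are hypothetical names, and the lambdas only record step names where a real program would call the SDK.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Runs the stored cleanup action when the guard goes out of scope.
class ScopeGuard {
public:
    explicit ScopeGuard(std::function<void()> fn) : fn_(std::move(fn)) {}
    ~ScopeGuard() { if (fn_) fn_(); }
    ScopeGuard(const ScopeGuard&) = delete;
    ScopeGuard& operator=(const ScopeGuard&) = delete;

private:
    std::function<void()> fn_;
};

// Records the order in which the placeholder cleanup steps run.
std::vector<std::string> runTeardownDemo() {
    std::vector<std::string> log;
    {
        // Guards are created in setup order...
        ScopeGuard lib([&log] { log.push_back("deinit lib"); });
        ScopeGuard iface([&log] { log.push_back("close interface"); });
        ScopeGuard dev([&log] { log.push_back("close device"); });
        ScopeGuard cap([&log] { log.push_back("stop capture"); });
    }   // ...and destroyed here in reverse order of construction.
    return log;
}
```

Because C++ destroys local objects in reverse order of construction, declaring each guard immediately after the matching setup call yields the teardown order without repeating it by hand.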